Hello! I'm a postdoc at MIT, working with Prof. Tomaso Poggio.

I work on machine learning theory and the mathematics needed to develop it.

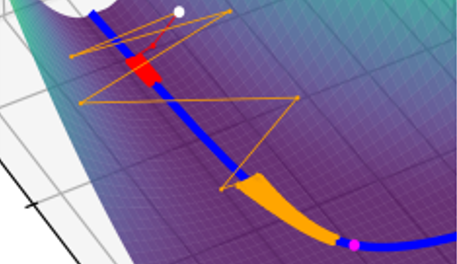

I work on the theoretical foundations of machine learning, particularly on deep neural networks and their optimization.

More precisely, in my PhD I studied (1) where optimization algorithms converge on general landscapes, (2) how hyperparameters affect that, and (3) whether we can link this to changes in the performance of the resulting models.

I have also recently started working on training instabilities.

I'm also becoming interested in the certification of neural networks and in many other topics in machine learning theory.

If you're interested, you can watch these talks of mine:

Edge of Stochastic Stability: Revisiting the Edge of Stability for SGD.

Does SGD Seek Flatness or Sharpness? An Exactly Solvable Model.

Gradient Descent Converges Linearly to Flatter Minima than Gradient Flow in Shallow Linear Networks.

How Neural Networks Learn the Support is an Implicit Regularization Effect of SGD.

On the Trajectories of SGD Without Replacement.

Deep neural network approximation theory for high-dimensional functions.

High-dimensional approximation spaces of artificial neural networks and applications to partial differential equations.

At some point during my PhD, I became interested in the ethical aspects of my job. I helped organize multiple events to raise awareness in the community of the responsibilities that modelers and statisticians bear for the high-stakes decisions and policies based on their work.

See the CEST-UCL Seminar series on responsible modelling, and check out our conference.

I was on the committee of the Princeton AI Club (PAIC), where we hosted many exciting talks featuring Yoshua Bengio, Max Welling, Chelsea Finn, Tamara Broderick, and others. I have also spoken about the advent of AI and its impact on society on Italian public radio, including on Zapping on Rai Radio 1.

Teaching

- Statistical Learning Theory and Applications -- MIT (Fall 2025)

- Senior Thesis -- Princeton (Fall 2024 and Spring 2025)

- Optimization -- Princeton (Spring 2024)

- Computing & Optimization -- Princeton (Fall 2023)

- Analysis of Big Data -- Princeton (Spring 2022 and Spring 2023)

- Energy & Commodities Markets -- Princeton (Fall 2021)

- Numerical PDEs -- ETH Zurich (Spring 2020)

- Computational Methods for Engineering and Applications -- ETH Zurich (Fall 2019)

Last modified on Jun 7th, 2025.

Template credits to Jon Barron!